Where we come from

Like many startups, Applivery was born as a side project. It was a simple but ambitious service focused on distributing mobile applications in an efficient and simplified way.

Given its humble origins, the costs of the service had to be tightly controlled, so we decided to deploy the first versions on the most affordable and simple cloud provider of the moment, DigitalOcean, and to also use a simple storage system based on AWS S3.

The service was originally conceived as a single monolith whose processes, databases and services ran on a single droplet.

However, that simple but ambitious project gradually became a platform that had to support dozens of customers and thousands of daily active users. In addition, the project began to incorporate numerous development ideas, resulting in an extensive roadmap that would extend over the next two years. Developing a product of that size on a single droplet no longer seemed like such a good idea. This is why we decided to grow the team and rebuild the project from scratch.

At that point, we decided to move to a microservices-oriented architecture, separating the frontend from the APIs. All services would be deployed in Docker containers through a sophisticated continuous integration pipeline running on CircleCI. To simplify the transition between the old and the new version, we decided to keep DigitalOcean as the compute and DNS provider, but we took the opportunity to use external services for the database (MongoDB Atlas), the queuing system (CloudAMQP), and the Docker image registry (AWS ECR).

This new microservices system went live at the end of 2019 and made use of Dokku for container orchestration.

However, although we had made a great effort to rebuild and re-architect the entire platform, we were still serving all the traffic from a single virtual machine that acted as a bottleneck. That’s why, shortly after, we decided to make the leap to Kubernetes.

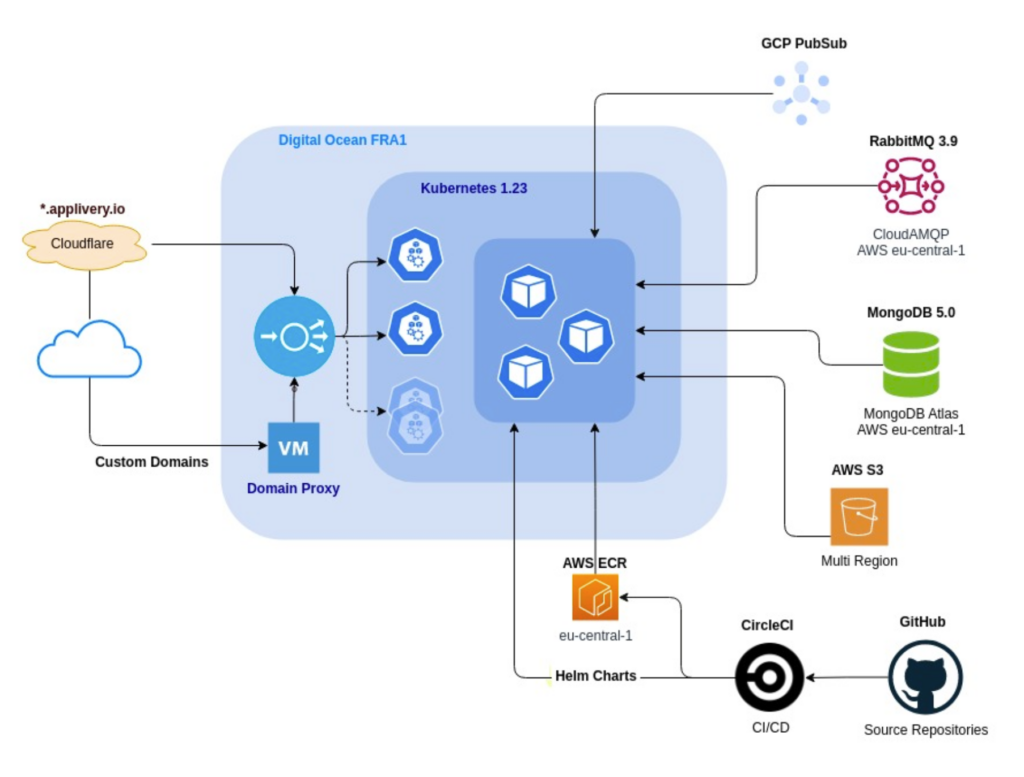

DigitalOcean was still the most affordable Cloud provider for a managed Kubernetes service, so we didn’t hesitate and migrated the container orchestrator to Kubernetes, adding numerous technical, architectural and security improvements along the way, including:

- Migration to TypeScript

- Infrastructure as code with Pulumi

- Versioned deployments with Helm

- Auto-scaling of machines and services

- Self-renewing certificates through Let’s Encrypt

- Deployments with zero-downtime and auto-rollbacks

- Geographical clustering of computing services and external services (MongoDB Atlas, CloudAMQP, etc.) in Frankfurt

- Migration of DigitalOcean domain management to Cloudflare as DNS and firewall service

Likewise, to provide the custom domain service in the App Stores we used another virtual machine with an elastic IP, because DigitalOcean did not provide a NAT gateway to control the outgoing IP of the workers.

At that point, the architecture looked like the following:

Growing faster than expected

This whole system, built mainly on DigitalOcean and supported by AWS, worked decently and with very controlled costs for many months. However, as the number of customers grew and, consequently, the traffic, we started to suffer from occasional problems that we could not control or foresee:

- The DNS resolution system of the workers experienced occasional problems that we could not handle.

- We suffered crashes of the Kubernetes control plane, either due to forced updates or internal DigitalOcean problems.

- At certain times, the transfer rate between our network at DigitalOcean and AWS S3 would drop to as low as a few KB/second, making the build upload service unusable for long periods of time.

At that time (early 2021) we were implementing what would be Applivery’s major evolution: Applivery Device Management. This evolution also led us to completely re-architect our management dashboard and rework our company branding to orient them towards a better user experience.

However, despite all efforts, the infrastructure had proven to be complex and, at times, unstable. Our ambitious expansion plans and product evolution were severely hampered by the time invested in troubleshooting and incident resolution.

We needed greater control over the managed Kubernetes service, and improved network stability was a must. In addition, given the expansion of the service and product roadmap, we wanted to take advantage of this opportunity to continue improving our platform in the following business-critical areas:

- Reduce the number of external services and dependencies.

- Improve the security of all connections and communications between systems.

- Increase observability to prevent and anticipate potential problems.

- Find a geographic region that is more suitable for our service and that guarantees we can support current and future demand.

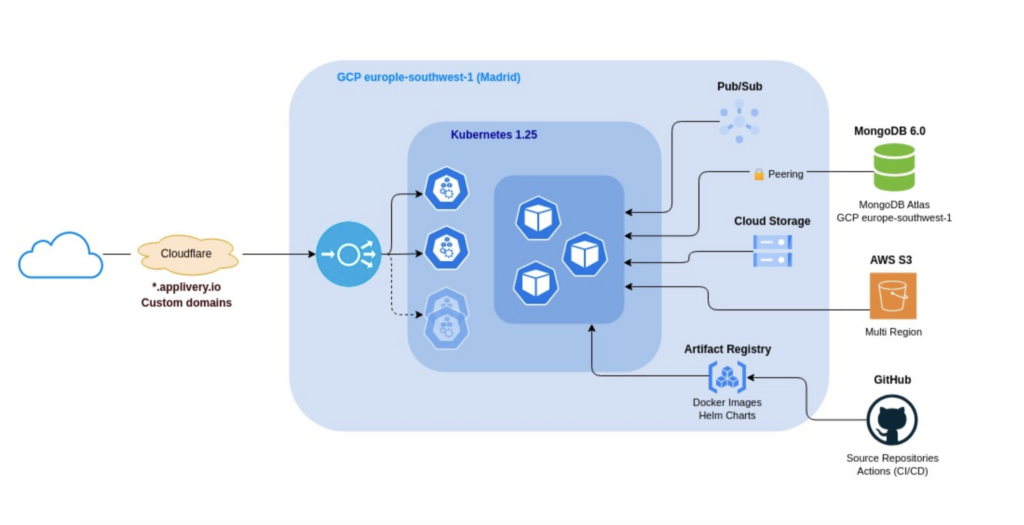

The jump to Google Cloud Platform

At this point, we had decided that we wanted to leave DigitalOcean and move to a hyperscaler more suited to our reality.

After some weeks investing time in proofs of concept on Amazon Web Services (AWS), Google Cloud Platform (GCP) and Azure, and despite the fact that the technical team’s experience in cloud environments was mainly focused on AWS, we ended up opting for GCP. There were several reasons:

Greater control over the Kubernetes managed service.

The quality and ease of use of Google Kubernetes Engine (GKE) surprised us from the first moment we tried it. The number of options and the observability it offers over the cluster by default were the best we had tested so far.

Improve network stability

Google gives us the option to use its own network with global connectivity, which provides much better performance and latency than the standard network, resulting in a better experience for all users anywhere in the world.

Reduce the number of external services

Both AWS and GCP offered us a lot of options to internalize the external services we were using, but due to our strong integration with the Android ecosystem, we already relied on some GCP services (such as Pub/Sub or the Android Management API). On top of this, GCP offered other unique services like BigQuery, Analytics, Workspace or Maps that we planned to use in the near future.

Securing all connections

Due to the limitations we were experiencing at DigitalOcean, we had ample room for improvement in security. We identified that GCP would help us in two main ways. First, the VPC peering functionality with automatic import and export of routes would greatly simplify our integration with MongoDB Atlas. Second, it offered us the ability to authenticate from GitHub Actions without exchanging keys via Workload Identity Federation, avoiding the exposure of any kind of access keys.

Increase observability

Previously we had integrated Grafana and Prometheus to manage monitoring and observability, services that ran within the cluster and that we had to maintain. With GKE this was no longer necessary, because the console itself already offered everything we needed, such as access to logs, node and pod visibility, graph dashboards or alerts.

Switching to a more suitable region for our service

Finally, GCP was about to launch its new region here in Madrid, Spain (europe-southwest1), so, true to our style and after several tests, we went for it. We concluded that, compared to our previous region in Frankfurt, we substantially improved latency throughout the Americas without affecting Central Europe or the rest of the world.

How did it go?

We started by rewriting our Pulumi recipes to build a completely new pre-production environment, migrating to several GCP services along the way, among them:

- We changed the RabbitMQ queuing system to GCP Pub/Sub.

- We moved Docker images and Helm charts from AWS ECR and our ChartMuseum to GCP Artifact Registry.

- We assigned elastic IPs to the load balancer and NAT gateway, which gives us a more reliable service by controlling our inbound and outbound IPs.

- We changed our observability stack to GCP Monitoring, Logging and Trace services.
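A broker swap like the RabbitMQ to Pub/Sub change listed above is usually easier when application code talks to a small interface rather than to a specific client library. The following is only a minimal sketch of that idea, not the code we run: `MessageBus`, `InMemoryBus`, `notifyBuildUploaded` and the `build-uploaded` topic are hypothetical names, and the in-memory class stands in for a real RabbitMQ or Pub/Sub adapter.

```typescript
// Hypothetical names throughout: none of these types come from the
// RabbitMQ or Pub/Sub client libraries.
type Handler = (payload: string) => void;

interface MessageBus {
  publish(topic: string, payload: string): void;
  subscribe(topic: string, handler: Handler): void;
}

// In-memory stand-in for a broker adapter. A real adapter would wrap the
// RabbitMQ or Pub/Sub client behind the same two methods.
class InMemoryBus implements MessageBus {
  private handlers = new Map<string, Handler[]>();

  publish(topic: string, payload: string): void {
    // Deliver synchronously to every handler registered for the topic.
    for (const handler of this.handlers.get(topic) ?? []) {
      handler(payload);
    }
  }

  subscribe(topic: string, handler: Handler): void {
    const existing = this.handlers.get(topic) ?? [];
    this.handlers.set(topic, [...existing, handler]);
  }
}

// Application code depends only on the interface, so swapping the broker
// means swapping the adapter passed in here.
function notifyBuildUploaded(bus: MessageBus, buildId: string): void {
  bus.publish("build-uploaded", JSON.stringify({ buildId }));
}

const bus = new InMemoryBus();
const received: string[] = [];
bus.subscribe("build-uploaded", (payload) => received.push(payload));
notifyBuildUploaded(bus, "build-123");
console.log(received);
```

With this shape, callers such as `notifyBuildUploaded` stay unchanged when the adapter behind `MessageBus` is replaced.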

Thanks to the options provided by GCP, we also made changes to our complementary services:

- We connected the Cloudflare Zero Trust VPN to our new private network.

- We moved our MongoDB Atlas cluster to the same region as GKE, connecting it by VPC Peering to the private network.

- We migrated our CI/CD from CircleCI to GitHub Actions, taking advantage of the fact that we already had the code hosted there. We got more efficient, secure and integrated flows with the development teams.

- We migrated our custom domain service to Cloudflare Custom Hostnames.

Once the whole stack was tuned and verified, we set up the production environment in a matter of minutes thanks to our Pulumi recipes. We also started a live migration of MongoDB Atlas to the new region.

When everything was ready, we stopped the deployments on the old cluster, finished the live migration in MongoDB Atlas and changed the DNS in Cloudflare. From that moment, all the traffic was arriving at the new environment. In total, we had less than one minute of downtime.
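The order of those cutover steps matters: DNS should only be switched once the earlier steps have succeeded. As a rough illustration, and assuming a hypothetical `runCutover` helper whose steps are stand-ins that simply report success, the sequence could be modelled like this:

```typescript
// Hypothetical cutover runbook: the step names mirror the sequence described
// above, and each `run` is a stand-in that only reports success or failure.
type Step = { name: string; run: () => boolean };

type CutoverResult = { completed: string[]; failedAt: string | null };

// Runs the steps in order and stops at the first failure, so later steps
// (such as the DNS switch) never run after an earlier one has failed.
function runCutover(steps: Step[]): CutoverResult {
  const completed: string[] = [];
  for (const step of steps) {
    if (!step.run()) {
      return { completed, failedAt: step.name };
    }
    completed.push(step.name);
  }
  return { completed, failedAt: null };
}

const cutoverSteps: Step[] = [
  { name: "stop deployments on the old cluster", run: () => true },
  { name: "finish the MongoDB Atlas live migration", run: () => true },
  { name: "switch DNS in Cloudflare", run: () => true },
];

const result = runCutover(cutoverSteps);
console.log(result.completed);
```

In practice each `run` would call out to the relevant tool or API; the point of the sketch is only the stop-on-first-failure ordering.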

What benefits this new architecture has brought us

After the migration, we have improved in all measurable aspects, such as response times, transfer speed and processing capacity.

Security

Improved network security and inter-service communication, which can now avoid external connections.

Contingency

Improvements in security and contingency against attacks thanks to the Cloudflare firewall. Multi-regional high availability (HA) thanks to GKE multi-cluster Ingress.

Performance

Build processing is up to 2x faster, and we can now parallelize processing.

User Experience

Improved user experience by significantly reducing response times of our services. The average has decreased from 120ms to 30ms, and in Madrid, we have even achieved response times below 5ms.
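To put the averages in perspective, the arithmetic is small enough to sketch. The helper below is purely illustrative (`latencyImprovement` is a hypothetical name) and uses only the 120 ms and 30 ms figures quoted above.

```typescript
// Relative improvement between two latency measurements, in milliseconds.
function latencyImprovement(
  beforeMs: number,
  afterMs: number
): { reductionPercent: number; speedupFactor: number } {
  if (beforeMs <= 0 || afterMs <= 0) {
    throw new Error("latencies must be positive");
  }
  return {
    // Share of the original latency that was removed.
    reductionPercent: ((beforeMs - afterMs) / beforeMs) * 100,
    // How many times faster the new average is.
    speedupFactor: beforeMs / afterMs,
  };
}

const average = latencyImprovement(120, 30);
console.log(average.reductionPercent); // 75
console.log(average.speedupFactor); // 4
```

In other words, going from 120 ms to 30 ms is a 75% reduction, or responses that are four times faster on average.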

Response Times

Database query and response times are around 2x faster, as the database and the cluster are now in the same region and communicate through a private network.

CI/CD Processes

Acceleration of CI/CD processes and, consequently, of our continuous delivery capabilities through a more centralized development experience via GitHub.

Conclusions

Applivery has evolved constantly since its birth, and new needs and features are continuously being developed. We have always maintained a commitment to innovation, adapting to business requirements to stay at the forefront of technology and offer a reliable and scalable service to all our customers.

This latest iteration with GCP is a further example of this: we made a great effort at all levels to consolidate the foundations of our platform, ensuring its long-term robustness and allowing us to continue to innovate in an agile and sustainable way over time.

What’s next? One shot to the one that says generative AI 😀